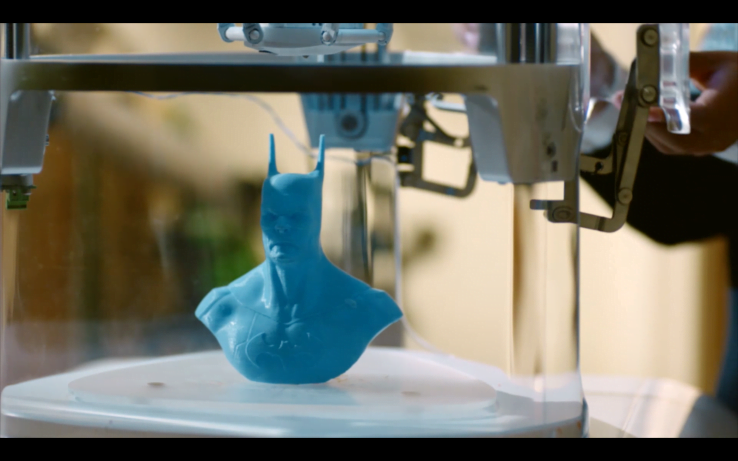

As 3D printers grow smarter and continue to embed themselves in manufacturing and product creation processes, they are exposed to online malefactors just like every other device and network. Security researchers suggest a way to prevent hackers from sabotaging the outputs of 3D printers: listen very, very carefully.

Now, you’re forgiven if someone hacking a 3D printer doesn’t strike you as a particularly egregious threat. But 3D printers really are starting to be used for more than hobby projects and prototyping: prosthetics are one common use, and improved materials have made automotive and aerospace applications possible.

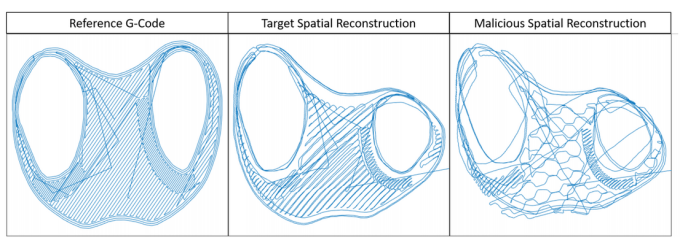

The problem, as some security researchers have already demonstrated, is that a hacker could take over the machine and not merely shut it down but introduce flaws into the printed objects themselves. All it takes is a few small air gaps, a misalignment of internal struts or some such tweak, and all of a sudden the part rated to hold 75 pounds only holds 20. That could be catastrophic in many circumstances.

And of course the sabotaged parts may look identical to ordinary ones to the naked eye. What to do?

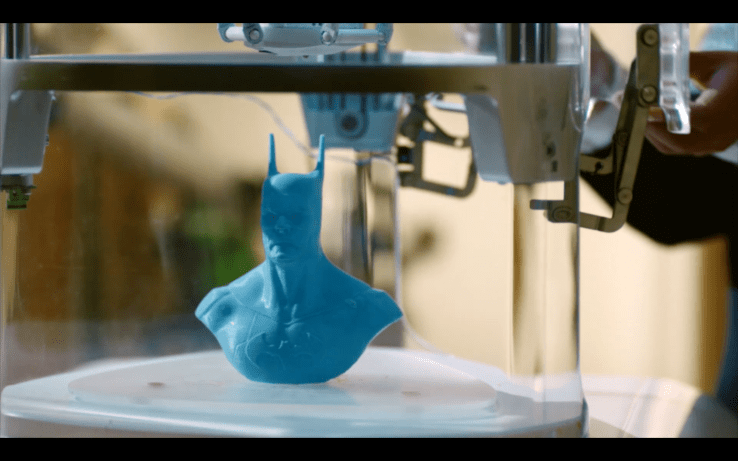

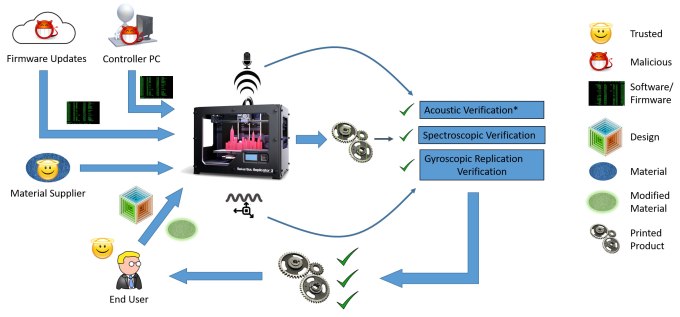

A team from Rutgers and Georgia Tech suggests three methods, one of which is easy and clever enough to integrate widely — a bit like Shazam for 3D printing. (The other two are still cool.)

I don’t know if you’ve ever been next to a 3D printer while it works, but it makes a racket. That’s because many 3D printers use a moving print head and various other mechanical parts, all of which produce the usual whines, clicks and other noises.

The researchers recorded those noises while a reference print was being made, then fed that recording in small chunks to an algorithm that classifies sound so it can be recognized again.

When a new print is done, the sound is recorded again and submitted for inspection by the algorithm. If it’s the same all the way through, chances are the print hasn’t been tampered with. Any significant variation from the original sound, such as certain operations ending too fast or anomalous peaks in the middle of normally flat sections, will be picked up by the system and the print flagged.
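
The comparison step can be illustrated with a minimal Python sketch. It is not the researchers' classifier: it swaps in a much simpler per-frame loudness (RMS energy) check, and the names `frame_energies` and `flag_print`, the frame size and the tolerance are all hypothetical choices made for illustration.

```python
import math

def frame_energies(samples, frame_size):
    """Split a recording into fixed-size frames and return each frame's RMS energy."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies

def flag_print(reference, candidate, frame_size=4, tolerance=0.2):
    """Return the indices of frames where the new print sounds different.

    Assumption of this sketch: loudness per frame stands in for the
    real sound classifier described in the article.
    """
    ref = frame_energies(reference, frame_size)
    cand = frame_energies(candidate, frame_size)
    # Frames present in both recordings: flag large energy differences.
    flagged = [i for i, (r, c) in enumerate(zip(ref, cand)) if abs(r - c) > tolerance]
    # A recording that ends early (or runs long) flags every unmatched frame.
    flagged.extend(range(min(len(ref), len(cand)), max(len(ref), len(cand))))
    return flagged
```

With a flat 16-sample reference of `0.1`, an identical recording yields no flagged frames, a loud burst in the third frame yields `[2]`, and a recording that stops four samples early yields `[3]`. A real system would need features far more robust to ambient noise than raw loudness.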

It’s just a proof of concept, so there’s still room for improvement, such as lowering false positives and raising resistance to ambient noise.

Or the acoustic verification could be combined with other measures the team suggested. One requires the print head to be equipped with a sensor that records all its movements. If these differ from a reference motion path, boom, flagged.
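
As a rough sketch of that motion check, assuming the sensor reports the print head's position as `(x, y, z)` coordinates in millimetres at the same steps as the reference, a comparison could look like the following. The function name `motion_matches` and the 0.5 mm threshold are hypothetical, not taken from the paper.

```python
import math

def motion_matches(reference_path, recorded_path, max_error_mm=0.5):
    """Return True if every recorded position stays close to the reference.

    Both paths are lists of (x, y, z) positions in millimetres, sampled at
    the same steps of the print (an assumption of this sketch).
    """
    if len(reference_path) != len(recorded_path):
        return False  # missing or extra moves count as a mismatch
    return all(
        math.dist(ref, rec) <= max_error_mm
        for ref, rec in zip(reference_path, recorded_path)
    )
```

A print would be flagged whenever `motion_matches` returns `False`. A real implementation would also have to handle timing drift between the two recordings, which this sketch ignores.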

The third method impregnates the extrusion material with nanoparticles that give it a very specific spectroscopic signature. If other materials are used instead, or air gaps are left in the print, the signature will change and, you guessed it, the object gets flagged.
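
A signature check of that kind might, in the simplest case, compare a measured spectrum against a reference band by band. The sketch below assumes each spectrum is a list of intensities normalized to the range 0 to 1; the name `signature_matches` and the tolerance are hypothetical, and the article does not describe how the researchers actually compare signatures.

```python
def signature_matches(reference, measured, tolerance=0.05):
    """Compare two spectra given as equal-length lists of band intensities.

    Assumption of this sketch: intensities are normalized to 0..1 and
    both spectra cover the same bands in the same order.
    """
    if len(reference) != len(measured):
        raise ValueError("spectra must cover the same bands")
    # Every band must stay within the tolerance of the reference value.
    return all(abs(r - m) <= tolerance for r, m in zip(reference, measured))
```

An object whose spectrum fails this check in any band would be flagged for inspection.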

Like with the DNA-based malware vector, the hacks and countermeasures proposed here are speculative right now, but it’s never too early to start thinking about them.

“You’ll see more types of attacks as well as proposed defenses in the 3D printing industry within about five years,” said Saman Aliari Zonouz, co-author of the study (PDF), in a Rutgers news release.

And like the DNA research, this paper was presented at the USENIX Security Symposium.

[Source: TechCrunch]